🐻 Papercubs go to UK Speech 2022

For the first in-person UK Speech Conference in 3 years, we were lucky enough to attend with most of our machine learning team, as both researchers and sponsors! The conference packed a whole host of activities into just 2 days, so suffice it to say there were more than a few highlights. Some of the most memorable included:

- Excellent keynotes from Prof Naomi Harte, Dr Jennifer Williams, and Dr Joanne Cleland on multimodality in speech, speech privacy, and developments in ultrasound imaging for speech therapy, respectively.

- Plenty of time to engage with fellow researchers across the 3 poster sessions.

- An excellent social that ticked all 3 big Ds — drinks, dinner, dancing! (We found out that one of us is really into ceilidhs)

- Clear skies and sunshine over Edinburgh for a quick trip up Arthur’s Seat.

For us, the conference was a great chance both to meet and reconnect with our fellow speech enthusiasts and to engage the community with the questions we’ve been grappling with over the past year. We were also lucky enough to have Tian present a poster on our work on controllable TTS, and Zack share some insights into dubbing evaluation in an oral session. Here’s what you missed!

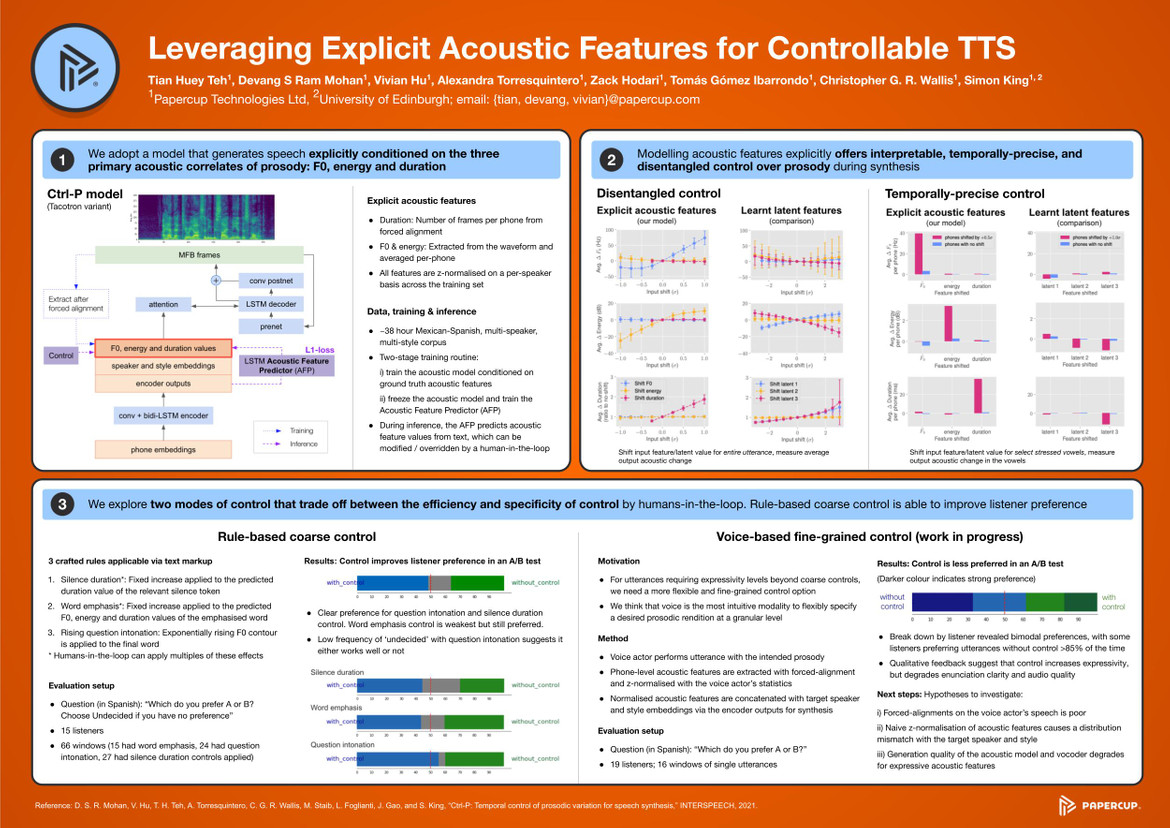

Leveraging explicit acoustic features for controllable TTS

At Papercup, we aim to make the world’s videos watchable in any language by leveraging human-in-the-loop assisted neural TTS to drive our AI-powered dubbing systems. As you can imagine, synthesising speech with prosody that is appropriate for our content is a major concern for us.

Last year at Interspeech, we published a paper describing Ctrl-P, a model that generates speech explicitly conditioned on three primary acoustic correlates of prosody: F0, energy, and duration. We found that modelling these acoustic features explicitly offers interpretable, temporally precise, and disentangled control over prosody during synthesis. The controllability that Ctrl-P offers gives us a couple of different ways of achieving an appropriate target rendition of an utterance, and that’s precisely the kind of research we wanted to discuss with the rest of the community.
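To make "explicit conditioning" a little more concrete, here is a minimal Python sketch, assuming a FastSpeech-style setup in which each phone carries its own F0, energy, and duration values, and durations are used to expand phone-level features to frame level. The `PhoneProsody` class and `upsample_to_frames` function are hypothetical illustrations, not Ctrl-P's actual code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PhoneProsody:
    """Hypothetical per-phone targets: F0 in Hz, energy in arbitrary units, duration in frames."""
    phone: str
    f0: float
    energy: float
    duration: int


def upsample_to_frames(phones: list[PhoneProsody]) -> list[tuple[str, float, float]]:
    """Repeat each phone's (phone, F0, energy) for `duration` frames,
    in the style of a FastSpeech-like length regulator."""
    frames: list[tuple[str, float, float]] = []
    for p in phones:
        frames.extend([(p.phone, p.f0, p.energy)] * p.duration)
    return frames


# Usage: lengthening one vowel changes only its own span of frames.
utterance = [PhoneProsody("h", 120.0, 0.5, 3), PhoneProsody("ai", 180.0, 0.8, 5)]
print(len(upsample_to_frames(utterance)))  # 8
utterance[1].duration = 10
print(len(upsample_to_frames(utterance)))  # 13
```

Because each phone's values live in one place, editing a single duration, F0, or energy value changes only that stretch of the output, which is what makes this style of control temporally precise and easy to interpret.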

Tian presented our work exploring two possible modes of control by humans-in-the-loop. The first allows for coarse control using simple hand-crafted rules that can be applied via text markup. These rules enable some basic but salient prosodic effects, such as adjusting silence or pause durations, adding word emphasis, and applying question intonation. In a simple A/B test, we found that applying these rules improved listener preference.
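As an illustration of how markup rules could act on such features, the sketch below edits model-predicted word-level targets. Everything in it is hypothetical: the `*word*` emphasis and `[pause]` syntax, the scaling constants, and the word-level granularity are assumptions made for the example. Question intonation is also crudely approximated by raising F0 on the final word rather than shaping a proper rising contour.

```python
from __future__ import annotations

from dataclasses import dataclass, replace

# Hypothetical rule strengths, chosen for illustration only.
EMPHASIS_F0_SCALE = 1.2
EMPHASIS_ENERGY_SCALE = 1.3
PAUSE_FRAMES = 20
QUESTION_F0_SCALE = 1.25


@dataclass
class WordProsody:
    word: str
    f0: float              # mean F0 in Hz, as predicted by the model
    energy: float          # mean energy, arbitrary units
    duration: int          # length in frames
    pause_after: int = 0   # silence frames inserted after the word


def apply_markup(markup: str, predicted: list[WordProsody]) -> list[WordProsody]:
    """Apply simple rules to predicted targets. Assumes the markup's words align
    one-to-one with `predicted`; `[pause]` tokens are not counted as words."""
    edited = [replace(w) for w in predicted]  # copies, so the model's predictions are kept
    i = -1  # index of the most recent word seen
    for token in markup.split():
        if token == "[pause]":
            if i >= 0:
                edited[i].pause_after += PAUSE_FRAMES
            continue
        i += 1
        bare = token.rstrip("?!.,")
        if len(bare) > 2 and bare.startswith("*") and bare.endswith("*"):
            edited[i].f0 *= EMPHASIS_F0_SCALE
            edited[i].energy *= EMPHASIS_ENERGY_SCALE
    if markup.rstrip().endswith("?") and edited:
        # Crude question intonation: raise F0 on the final word.
        edited[-1].f0 *= QUESTION_F0_SCALE
    return edited


# Usage: a pause before an emphasised final word in a question.
predicted = [
    WordProsody("is", 150.0, 0.6, 8),
    WordProsody("it", 140.0, 0.5, 6),
    WordProsody("really", 145.0, 0.7, 15),
]
for w in apply_markup("is it [pause] *really*?", predicted):
    print(w)
```

Keeping the rules as edits on top of the predicted targets, rather than replacing them, means an editor can always fall back to the model's own rendition for any word the markup leaves alone.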

However, this level of granularity doesn’t give us control over many of the nuances and subtleties of speech. For that, we investigated an alternative approach for fine-grained control: using the voice directly! Our intuition is that voice is the most natural modality for flexibly specifying a desired prosodic rendition at a granular level. In this mode, a human-in-the-loop performs the utterance with the intended prosody. We extract the aforementioned acoustic features from this recording and use those values to drive the model instead of the predicted ones. While qualitative feedback suggests this increases expressivity, enunciation clarity and audio quality may also be degraded, so there’s definitely more to investigate here! For more detailed insights, here’s a peek at the poster.
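The voice-driven mode boils down to measuring F0 and energy from the performed take and substituting them for the model's predictions. Below is a rough, dependency-free Python sketch under several stated assumptions: a crude autocorrelation pitch tracker stands in for a proper one such as YIN or pYIN, per-phone durations (in frames) are assumed to come from a forced aligner, and features are averaged per phone. It is an illustration only and is not meant to reflect any particular production extractor.

```python
import math


def frame_signal(samples, frame_len, hop):
    """Split a signal into overlapping frames, dropping any incomplete final frame."""
    return [samples[s:s + frame_len] for s in range(0, len(samples) - frame_len + 1, hop)]


def rms_energy(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def autocorr_f0(frame, sr, fmin=60.0, fmax=400.0, voicing_threshold=0.3):
    """Crude F0 estimate: the lag in [sr/fmax, sr/fmin] with the highest autocorrelation,
    normalised by frame energy. Returns 0.0 for frames judged unvoiced."""
    energy = sum(x * x for x in frame)
    if energy == 0.0:
        return 0.0  # pure silence
    min_lag = int(sr / fmax)
    max_lag = min(int(sr / fmin), len(frame) - 1)
    best_lag, best_r = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        r = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r
    return sr / best_lag if best_r >= voicing_threshold else 0.0


def extract_phone_prosody(samples, sr, durations, frame_ms=25.0, hop_ms=10.0):
    """Return (mean F0 over voiced frames, mean energy, duration) for each phone.
    `durations` lists frame counts per phone, assumed to come from a forced aligner."""
    frame_len = int(sr * frame_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    frames = frame_signal(samples, frame_len, hop)
    f0s = [autocorr_f0(f, sr) for f in frames]
    energies = [rms_energy(f) for f in frames]

    feats, start = [], 0
    for d in durations:
        voiced = [f for f in f0s[start:start + d] if f > 0.0]
        seg_energy = energies[start:start + d]
        mean_f0 = sum(voiced) / len(voiced) if voiced else 0.0
        mean_energy = sum(seg_energy) / len(seg_energy) if seg_energy else 0.0
        feats.append((mean_f0, mean_energy, d))
        start += d
    return feats


# Usage: 2400 samples (0.3 s) of a 200 Hz tone at 8 kHz give 28 frames;
# pretend an aligner split them into two phones of 14 frames each.
sr = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(2400)]
print(extract_phone_prosody(tone, sr, durations=[14, 14]))
```

On the synthetic tone, both phones should come out with an F0 close to 200 Hz; the 8 kHz rate simply keeps the pure-Python loops quick for the demo. The resulting per-phone values are what would be fed to the model in place of its own predictions.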

Luckily, it seems we were in good company at UK Speech, with a number of other researchers doing similar, interesting work in the controllability space. Matthew Aylett and the team at CereProc presented a voice-driven puppetry system, and Gustavo Beck and his collaborators at KTH Royal Institute of Technology brought formant synthesis back with a proof-of-concept investigating the use of formants for neural TTS control. It’s great to see others also building on the value of controllability in neural models, so we’ll definitely be watching this space!

Evaluating watchability for video localisation

Zack gave a talk on the difficulty of evaluating watchability for AI video localisation. By watchability, we mean all aspects that contribute to the end-viewer experience, including things like translation quality, expressivity of the synthesised speech, and voice selection. To demonstrate why this is difficult, take the following example from his presentation.

Listen to the following samples (before watching the video below!) and try to determine which is more natural, more appropriate, or which you prefer the most.

Now watch the video below.

Has your opinion changed on which sample is better?

When building any watchability evaluation, we have to take many variables into account: how context determines the appropriateness of the synthesised speech, the purpose of the content, how long evaluators are willing to watch a sample, and the list goes on.

In his talk, Zack detailed why this evaluation is important for the video localisation we are doing at Papercup: both for us to understand the impact of watchability on how viewers experience our dubbed videos, and to identify areas for our system to improve.

Ultimately, how to evaluate watchability remains largely unresolved, and it’s an exciting problem for us to keep researching.

As with controllability, we were keen to see other work in the dubbing space, in particular Protima Nomo Sudro, Anton Ragni and Thomas Hain’s work on using voice conversion to transform adult speech into child speech for dubbing. What a cool concept!

And that’s another conference under our belts! Big shout-out to the organisers, Catherine Lai and Peter Bell, for putting together such a great event. Looking forward to seeing everyone again next year :)
