Sheffield SLT CDT Conference 2022

Sheffield’s Centre for Doctoral Training (CDT) in Speech and Language Technologies recently hosted its second annual conference, and this year, after covid had kept the first edition online, it was held in person. We were so glad to be able to attend again, and it was even better to be there in person! It was refreshing to meet so many new people face to face.

Our favourite aspect of the CDT and the conference was the sheer breadth of topics covered, and it was very informative to learn about so many new areas. We really enjoyed hearing from each of the CDT cohorts about their group projects and research tracks, and it was great chatting with them one-on-one during the poster sessions. Other highlights included an interesting array of keynote speakers and an excellent panel on the future of speech and language technologies, which was a great opportunity to hear from experienced academic and industry researchers, including our very own Catherine Breslin!

In this blog post we’d like to highlight some of the keynotes, talks, and posters that stood out most to us.

Keynotes

Research Challenges in Devising the Next Generation Information Retrieval and Access Systems

The conference got off to a great start with a keynote on information retrieval from Emine Yilmaz, Professor at UCL and Amazon Scholar. Emine stressed the importance of understanding the task users are trying to accomplish when searching for information. Say you search for “solicitor fees” on Google: knowing that you are trying to understand what is involved in buying a house would remove a lot of ambiguity and lead to more relevant results. The approach Emine presented uses Bayesian Rose Trees to cluster queries into tasks and subtasks. With this representation, you can model the transitions between subtasks with each new query. Emine also covered correctness, bias, and relevance in online information, and the importance of annotation quality.

Large Scale Self/Semi-Supervised Learning for Speech Recognition

Dr. Bhuvana Ramabhadran, a research lead at Google, gave an excellent survey of many aspects of pre-trained ASR models. One of our key takeaways was how important data diversity is for self-supervised learning. On this note, Bhuvana discussed a couple of methods for expanding the data that ASR models can be pre-trained on. In TTS4PreTrain, a TTS model is used to synthesise speech from unspoken text, unlocking a wide range of additional pre-training data. Similarly, mSLAM (multilingual Speech and Language Model) uses both human speech and unspoken text. However, instead of relying on TTS to synthesise the unspoken text, mSLAM optimises different tasks depending on the type of data: it is first trained on untranscribed speech, then on transcribed speech, and finally on unspoken text. This lets mSLAM learn from more types of input and a wider variety of data.

Automatic Speech Recognition Research at Meta

The last keynote of the first day was from Dr. Duc Le of Meta AI Speech. He covered several recent ASR research projects, including a couple of pieces of work whose scale really impressed us. Their Kaizen model uses only 10 hours of supervised data plus 75,000 hours of unsupervised data to match the performance of a model trained on 650 hours of supervised data. Their paper on scaling ASR uses 4.5 million hours of speech from various sources and focuses on data filtering and cleaning, and on model scaling. The resulting model also works better on zero-shot tasks, including aphasic speech. Duc also presented some interesting grapheme-to-phoneme work that aims to improve the recognition of proper nouns such as names. Finally, Duc talked briefly about Project Aria, AR glasses intended for research data collection, and Ego4D, a 4,000-hour dataset of first-person video.

Speech anonymisation

Dr. Emmanuel Vincent, Senior Research Scientist and Head of Science at Inria, gave a comprehensive summary of speech anonymisation. One interesting distinction was between voice anonymisation and verbal anonymisation: the former removes the speaker’s identity, while the latter replaces or cuts out text or audio that might compromise privacy, such as names and locations. The VoicePrivacy challenge focuses on voice anonymisation, and Emmanuel covered a few methods from this work. Simple techniques, such as pitch shifting and vocal tract length normalisation (VTLN), fail against even a simple attacker. Methods that replace the user’s voice with a random other voice using voice conversion are more robust to attacks. A simple approach here is to perform TTS using the ASR transcript, the pitch contour, and a target speaker’s x-vector. Interestingly, they found it was best to select target speakers of a random gender and from a dense region of x-vector space! Unfortunately, if the attacker can narrow down the set of possible speakers, the privacy protection is much weaker. Emmanuel also discussed two other methods for voice anonymisation: adversarial learning for disentanglement, and differential privacy by adding noise to the pitch and phones. Finally, he discussed one method for verbal anonymisation, which uses named entity recognition (NER) to find personal information.
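To make the target-selection idea a little more concrete, here is a minimal NumPy sketch of picking a pseudo-speaker x-vector of a random gender from a dense region of x-vector space. This is our own illustration, not the VoicePrivacy baseline’s actual procedure: the density score (mean cosine similarity to the k nearest neighbours), the function name `select_target_xvector`, and the parameters `k` and `top_m` are all hypothetical choices, and the "x-vectors" are random toy data.

```python
import numpy as np


def select_target_xvector(pool, genders, rng, k=10, top_m=20):
    """Hypothetical target-speaker selection: random gender, dense x-vector region.

    pool:    (n_speakers, dim) array of candidate x-vectors
    genders: (n_speakers,) array of gender labels for the candidates
    """
    # 1. Choose the target gender at random, independently of the source speaker.
    chosen_gender = rng.choice(np.unique(genders))
    candidates = pool[genders == chosen_gender]

    # 2. Score each candidate's local density as the mean cosine similarity
    #    to its k nearest neighbours (the diagonal is masked to exclude itself).
    normed = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    sims = normed @ normed.T
    np.fill_diagonal(sims, -np.inf)
    k = min(k, len(candidates) - 1)
    density = np.sort(sims, axis=1)[:, -k:].mean(axis=1)

    # 3. Pick one of the top_m densest candidates at random as the target.
    densest = np.argsort(density)[-top_m:]
    return chosen_gender, candidates[rng.choice(densest)]


rng = np.random.default_rng(0)
dim = 16
centres = {"f": rng.normal(size=dim), "m": rng.normal(size=dim)}
pool_parts, label_parts = [], []
for label, centre in centres.items():
    # Toy pool per label: 30 tightly clustered x-vectors (a dense region) plus 30 scattered ones.
    pool_parts += [centre + 0.05 * rng.normal(size=(30, dim)), rng.normal(size=(30, dim))]
    label_parts += [label] * 60
pool, genders = np.vstack(pool_parts), np.array(label_parts)

gender, target = select_target_xvector(pool, genders, rng, k=10, top_m=10)
```

In a full system, the selected x-vector would then condition the TTS model alongside the ASR transcript and pitch contour; picking from a dense region means the pseudo-voice sits among many similar voices rather than standing out.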

Thoughts about what it means to be human

The last keynote, and the last session of the conference, was from Mark Hasegawa-Johnson, Professor of Electrical and Computer Engineering at the University of Illinois. Mark discussed three very interesting topics: multi-sensor data of families in the home, ASR for new and/or low-resource languages, and ASR for people with disabilities. The first topic seemed particularly impactful. Mark presented work collecting health sensor data and voice data from families raising children. The dataset includes detailed annotations of who is speaking and to whom. The recordings cluster according to the speakers’ audio characteristics, and colouring these clusters by the speaker annotations revealed high-level trends for each family, such as one parent talking more to one child than another, or a child interacting differently with each person. There seems to be enormous value in further analysis of this data, especially when paired with the health signals. It may surface systematic biases across families from different backgrounds, and their impact on important health factors such as stress, which can be detected from changes in heart rate modulation.

Talks

Research spotlights — ASR for atypical speech

Like the keynotes, the research spotlights covered a wide range of topics.

A number of speakers highlighted the need for better ASR approaches to atypical speech, such as sung or dysarthric speech.

Speech analytics for detecting neurological conditions in Global English

ASR systems, which often form one component of larger ML pipelines, struggle not only with atypical speech but also with the speech of non-native speakers. Sam Hollands’ talk on detecting dementia in native and non-native English speakers underscored how poor ASR quality on L2 English speech may bias automatic diagnostics built on top of transcriptions. Neurological diagnostics that are biased by language background can be harmful to underrepresented groups. Moreover, traditional ASR metrics like word error rate (WER) may not be sufficient for evaluating the downstream impact on automatic dementia detection, since they don’t convey whether the meaning of the speech has been preserved.
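To see why WER can miss changes in meaning, here is a minimal word-level WER implementation (a standard Levenshtein alignment over words) applied to two hypotheses we invented for illustration. Both score exactly the same WER against the reference, yet only one of them reverses the meaning, which is the kind of error that could matter for a downstream dementia classifier.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = minimum number of edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


reference = "i did not take my tablets this morning"
print(wer(reference, "i did not take my tablet this morning"))  # 0.125: harmless plural slip
print(wer(reference, "i did take my tablets this morning"))     # 0.125: meaning reversed
```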

ASR for sung speech

Dr. Gerardo Roa Dabike presented his PhD work on ASR for sung speech. He pointed out some fundamental acoustic differences between typical speech and sung speech, such as a wider pitch range, higher energy, phone elongation, and changes in the formants. As a result, incorporating musically motivated features like pitch and degree of voicing improved recognition performance. However, addressing the differences between sung and typical speech wasn’t enough on its own: these ASR systems also need to be robust to accompanied sung speech, i.e. audio containing both singing and instrumental backing tracks! Gerardo used time-domain source separation networks to separate the singing from the music, and adapted models trained on clean sung speech to the separated, distorted signals. This led to improved ASR performance on accompanied sung speech.

Research spotlights — Cross-lingual research

In the NLP space, there was much to be said about one of Papercup’s favourite challenges — translating between languages!

Representation Learning for Relational and Cross-Lingual Data

Xutan Peng spoke about methods for learning cross-lingual word embeddings (CLWEs). One popular approach, CLWE alignment, typically involves learning a mapping between independently trained monolingual word embeddings in order to project one language’s embeddings into the space of another. These mappings are commonly assumed to be linear based purely on empirical findings, despite the fact that languages differ in structure and etymological distance from each other, and that the learned embedding space may depend heavily on training conditions. Xutan’s work looks more closely at the theoretical conditions behind this linearity assumption and suggests that the preservation of analogies between word embeddings implies a linear CLWE mapping between the two languages (see his paper for a more in-depth explanation of this big claim!). Based on this finding, he further investigates how this might be extended to cross-lingual knowledge graph alignment.
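The linear-mapping assumption is easiest to picture with orthogonal Procrustes, one standard way of learning a CLWE alignment from a seed dictionary of translation pairs. The sketch below is our illustration of that general technique on synthetic data, not Xutan’s method: it hides an orthogonal map between two toy embedding spaces and recovers it in closed form from a single SVD.

```python
import numpy as np


def procrustes_align(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Return the orthogonal W minimising ||X @ W - Y||_F.

    Row i of X (source language) and row i of Y (target language) are the
    embeddings of a word and its translation from a seed dictionary.
    """
    U, _, Vt = np.linalg.svd(X.T @ Y)
    return U @ Vt


rng = np.random.default_rng(0)
n_pairs, dim = 200, 50
X = rng.normal(size=(n_pairs, dim))                 # toy source-language embeddings
Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))    # hidden orthogonal "true" mapping
Y = X @ Q + 0.01 * rng.normal(size=(n_pairs, dim))  # toy target embeddings, slightly noisy

W = procrustes_align(X, Y)
print(np.abs(W - Q).max())  # close to 0: the linear map is recovered from the pairs
```

Because the learned map is linear, an analogy offset in the source space (a - b + c ≈ d) carries over unchanged to the target space; Xutan’s result argues in the other direction, that preserved analogies imply such a linear map exists.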

Controlling Extra-Textual Attributes about Dialogue Participants

Meanwhile, just as TTS is a one-to-many problem, machine translation struggles with translation diversity for similar reasons: a single source sentence might have many possible translations depending on the variability of the target language. Sometimes this variability surfaces as translation bias, e.g. “doctor” translated with a masculine form and “nurse” with a feminine form. However, as Sebastian Vincent points out, this bias can be avoided, and some of the variability accounted for, by providing metadata to the model, e.g. formality, number of speakers, and gender (among other speaker characteristics), so that grammatical agreement in the translation follows this context rather than learned behavioural patterns. Not only does this lead to higher BLEU scores, it also gives some degree of control over the faithfulness of the resulting translation. To show this, they also collected an English-Polish parallel corpus of TV dialogue annotated with a number of these extra-textual attributes!
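One common way to feed this kind of metadata to an MT model is to prepend control tokens to the source sentence. The sketch below assumes that approach; the `<attribute=value>` tag format, the function name `add_control_tokens`, and the attribute names are hypothetical, and we don’t know the exact input format used in Sebastian’s work. For English to Polish, a speaker-gender tag matters because “I was tired” becomes “Byłem zmęczony” for a male speaker but “Byłam zmęczona” for a female speaker.

```python
def add_control_tokens(source: str, attributes: dict[str, str]) -> str:
    """Prefix a source sentence with hypothetical <attribute=value> control tokens.

    Sorting the attributes keeps the tag order consistent, so the model always
    sees a given attribute in the same position.
    """
    tags = [f"<{name}={value}>" for name, value in sorted(attributes.items())]
    return " ".join(tags + [source])


tagged = add_control_tokens(
    "I was tired.",
    {"speaker_gender": "female", "formality": "informal"},
)
print(tagged)  # <formality=informal> <speaker_gender=female> I was tired.
```

During training, the tag values would come from the corpus annotations; at inference time they can be set by hand, which is what makes this kind of input a lever for controlling the translation.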

Challenges spotlight

We also got a chance to see how the CDT candidates have been applying their skills and perspectives to various speech and NLP tasks through organised challenges. These included DialogSum, which involves generating high-level text summaries of face-to-face spoken dialogues, and the Clarity Prediction Challenge, a speech-intelligibility task designed to improve hearing-aid signal processing for hearing-impaired listeners. One group took on the Data-Centric AI Competition, which focused solely on data augmentation and quality. Given the often model-centric approach to ML, it was refreshing to see a task formulated specifically around data, with a fixed model architecture! To improve the training data, the students looked into data cleaning, existing augmentation techniques like RandAugment, and generative modelling approaches like FFJORD to create synthetic training data.

The students weren’t limited to participating in challenges, either: Edward Gow-Smith co-organised a shared task for SemEval 2022, Multilingual Idiomaticity Detection and Sentence Embedding. This challenge focused on potentially idiomatic multiword expressions (MWEs) across English, Portuguese, and Galician. For the idiomaticity detection task, participants explored both zero-shot and one-shot settings. Interestingly, approaches that worked well in one setting often did poorly in the other: zero-shot models tended to perform better with regularisation and data augmentation, while one-shot models benefited more from larger models and ensemble systems.

Posters

As much as we’ve enjoyed the accessibility of online and hybrid conferences this past year, there’s something we really appreciate about casually browsing a poster session in person and getting stuck into conversation with a researcher about their work. Of all the interesting posters we saw, these were some of our favourites!

Investigating the effects of prosody on uncertainty in speech-to-speech translation

Among the many varied areas of speech and NLP spanned by the CDT candidates, Kyle Reed’s poster was probably the most directly relevant to our research, and we really enjoyed having a longer discussion about the motivations and initial findings behind his work. Although the automation industry often tends to cut out the middleman, direct speech-to-speech (S2S) translation systems have not yet surpassed cascaded ones made up of ASR >> MT >> TTS. As a result, Kyle’s early work first aims to answer the question: is there really any point in doing direct S2S translation? One key argument is that important information is lost at each stage of a cascaded system, leading to compounding errors down the line. For the first part of the S2S pipeline, Kyle shows that MT systems do benefit from additional text context, but that this improvement tails off at some point. Why? Perhaps because prosody can carry semantic information that is not present in text, so translating only the transcribed source speech into the target language results in poorer translation quality. The next step is to show that using the source speech and leveraging its prosody can improve translation. We’re looking forward to seeing where this research goes next!

Voice conversion for dubbing adult to child speech

Protima Nomo Sudro presented research on the challenges of child characters in traditional dubbing. Protima suggests that when recording with child actors is not an option, voice conversion can be used to transform adult voice actors’ speech into child speech. Unfortunately, no paired adult-child data exists. To work around this, Protima is investigating a few options: the source can be generated using TTS or recorded by adult voice actors, and the target can be taken from existing children’s speech data or recorded by child voice actors. Preliminary objective results were shown using the existing children’s speech data, and we are very excited to see the results once data collection with the child voice actors is complete!

How does the pre-training objective affect what large language models learn about linguistic properties?

With all the buzz around large pre-trained language models (LLMs) and their successful application to NLP tasks, Ahmed Alajrami’s research investigates how the pre-training objective affects the linguistic information encoded in their learned representations. To do this, they train BERT with 5 different objectives (2 linguistically motivated and 3 non-linguistically motivated, including a completely random one; see the paper for details) and use different downstream tasks to assess the linguistic characteristics encoded by each model. Surprisingly, the differences between the learned representations appear to be small, with the model trained on the completely random objective even performing on par with the standard masked language modelling (MLM) objective on some tasks. This suggests that some tasks might be misleading or provide a poor signal of the quality of these models.

Improving tokenisation by alternative treatment of spaces

Another poster in the area of large language models, from Edward Gow-Smith, discussed how tokenisers handle complex language, such as morphologically complex words and compound noun phrases. Existing work has found that tokenisers often segment morphologically complex words poorly. Edward suggests that the treatment of whitespace is a leading cause: current tokenisers treat whitespace as a character that is merged into the first token of the following word. This is best illustrated with an example: “ beatable” tokenises to <_beat> <able>, while “ unbeatable” tokenises to <_un> <beat> <able>. The underscore represents the leading whitespace, which means that <_beat> and <beat> are different tokens even though they represent the same morpheme. Always treating whitespace as a token of its own avoids this shortcoming. Further, with more intervention in the tokeniser, common phrases or compound nouns could be explicitly added to the vocabulary, which could allow for better understanding of content with complex language, potentially including idioms.
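The whitespace issue can be reproduced with a toy greedy longest-match tokeniser. The two tiny vocabularies below are invented for illustration (real tokenisers learn their vocabularies from data, and SentencePiece, for example, marks spaces with `▁` rather than `_`); the point is only to show how the same morpheme “beat” ends up as two different tokens when whitespace is glued to the following word, and how that disappears when whitespace is a token of its own.

```python
def greedy_tokenise(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match tokenisation over a fixed toy vocabulary."""
    tokens, i = [], 0
    while i < len(text):
        # Take the longest vocabulary entry that matches at position i.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            raise ValueError(f"cannot tokenise {text[i:]!r} with this vocabulary")
    return tokens


def mark_spaces(text: str) -> str:
    # Make whitespace visible, as in the <_beat> notation above.
    return text.replace(" ", "_")


# Whitespace glued to the start of the next word's first token.
VOCAB_ATTACHED = {"_beat", "_un", "beat", "able"}
# Whitespace kept as a standalone token.
VOCAB_SEPARATE = {"_", "un", "beat", "able"}

for word in [" beatable", " unbeatable"]:
    marked = mark_spaces(word)
    print(greedy_tokenise(marked, VOCAB_ATTACHED), greedy_tokenise(marked, VOCAB_SEPARATE))
# ['_beat', 'able'] ['_', 'beat', 'able']
# ['_un', 'beat', 'able'] ['_', 'un', 'beat', 'able']
```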

Understanding algorithmic bias in ASR

Nina Markl’s work focuses on the causes, measurement, and impact of bias in speech technology. Her poster discussed this topic for ASR, along with a study of several ASR APIs (Mozilla, Amazon, and Google). Nina’s results demonstrated that ASR is biased against non-native speakers, speakers from the North and Northeast of England, and speakers from Northern Ireland. In addition, the disfluencies, morphology, and linguistic variation common in conversational speech lead to poorer performance. These biases can affect different groups of the population, and the resulting errors can simplify or alter the speaker’s intended message. Knowledge of these biases and an understanding of their impact should raise awareness and will hopefully move the industry towards fixing such issues.

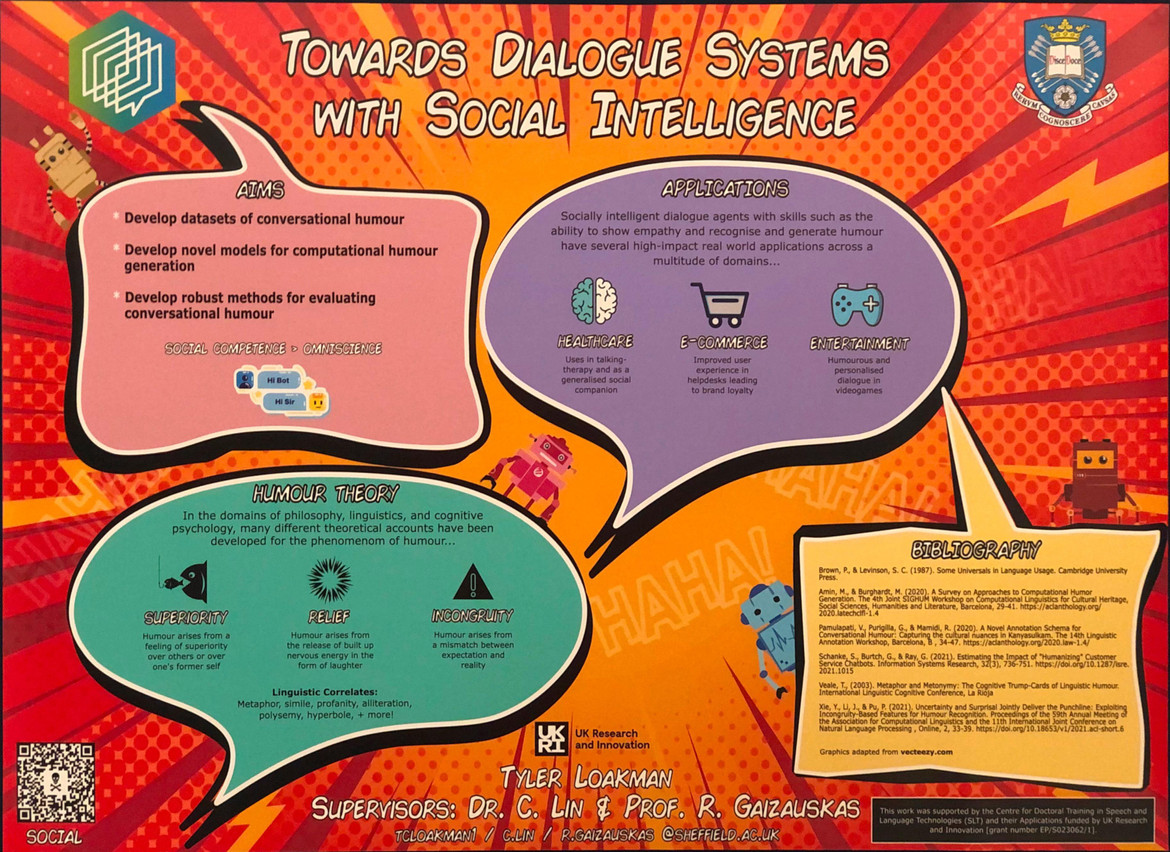

Towards dialogue systems with social intelligence

While many of the first CDT candidates have only just begun their research projects, it was awesome to walk around and see so many different areas of the field so well represented. But it wasn’t just the topics that caught our eye: we also have to give props to some of the incredibly well-designed posters the students made. As any researcher knows, putting together an easy-to-digest technical poster can be a real challenge, so we really enjoyed stopping to check out posters like Tyler Loakman’s on humour in dialogue systems! His work will focus on generating conversational humour, using different theories of humour as a basis for structuring generation.

And that’s a wrap! Thanks Sheffield for hosting another great conference!
