Phonological Features for 0-shot Multilingual Speech Synthesis

So far, code-switching is only available in multilingual models

When working with video in multiple languages, something we often have to deal with is a phenomenon called “code-switching”. Code-switching is when an utterance in one language (say, Spanish) contains a word or phrase in another language (say, English). This may happen when, for example, the name of a foreign person, institution or place is used, or a specific technology, platform or product name. Standard, state-of-the-art Text-To-Speech (TTS) models [1] trained on only one language are not naturally good at handling foreign words or phrases, although it has been shown that multilingual models trained on both languages (the “utterance” language and the “code-switching” language) are able to cope with them [2, 3].

In this post, we present our approach of using phonological features as inputs to sequence-to-sequence TTS, which allows us to code-switch into languages for which no training data is available, including the automatic approximation of phonemes completely unseen in training.

Bytes are not really all you need

Advances in this area were made by Li and colleagues, who, in their 2019 ICASSP paper [4], claim that “Bytes are all you need”: instead of characters, they rely on Unicode bytes as inputs to Tacotron 2. The good thing about Unicode bytes is that they are designed to represent characters in almost any language, so it is easy to see why they became the authors’ weapon of choice for training a multilingual TTS model that is able to code-switch (although it switches the speaker along with the language). However, one important fact that the catchy title might distract from is that, in addition to bytes as an input representation, what you really need is the right training data, ideally for every language you plan to code-switch into [2]. And that kind of data is only readily available for a mere fraction of the world’s 5000-7000 languages.

Nonetheless, speech has its own “bytes”, so to speak: while phonemes are the minimal distinctive units of speech (similar to how characters are the minimal composing units of written language), phonological features [5] can be viewed as the “Unicode of speech”. They include things like voicing, place and manner of articulation, vowel height and frontness, and lexical stress. While Unicode byte vectors create a meaningful space for written text (in that characters from the same orthography have a smaller distance in byte-vector space), phonological feature (PF) vectors lie in a meaningful space of sound, where similar-sounding phonemes are closer together. This means that, unlike Unicode bytes, PFs can actually generalise to make an “educated guess” about what hasn’t been seen before.
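To make the idea of a “meaningful space of sound” concrete, here is a minimal sketch that counts on how many phonological attributes two phonemes differ. The feature table is a hand-picked toy subset of the IPA consonant chart and the function names are our own for illustration; this is not the feature set used in the paper.

```python
# Toy feature table for a few consonants, read off the IPA consonant chart.
# The choice of phonemes and attributes is illustrative only.
FEATURES = {
    "p": {"voicing": "voiceless", "place": "bilabial", "manner": "plosive"},
    "b": {"voicing": "voiced", "place": "bilabial", "manner": "plosive"},
    "m": {"voicing": "voiced", "place": "bilabial", "manner": "nasal"},
    "s": {"voicing": "voiceless", "place": "alveolar", "manner": "fricative"},
    "z": {"voicing": "voiced", "place": "alveolar", "manner": "fricative"},
}


def feature_distance(a, b):
    """Number of phonological attributes on which phonemes a and b differ."""
    return sum(FEATURES[a][attr] != FEATURES[b][attr] for attr in FEATURES[a])


def closest(phoneme):
    """All other phonemes, sorted from most to least similar."""
    others = [p for p in FEATURES if p != phoneme]
    return sorted(others, key=lambda p: feature_distance(phoneme, p))


print(feature_distance("p", "b"))  # [p] and [b] differ in voicing only
print(feature_distance("p", "z"))  # [p] and [z] differ in all three attributes
print(closest("s"))                # [z] comes first: it only differs in voicing
```

Similar-sounding pairs such as [p]/[b] or [s]/[z] end up one step apart, which is the property a model can exploit when it meets a sound it has never been trained on.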

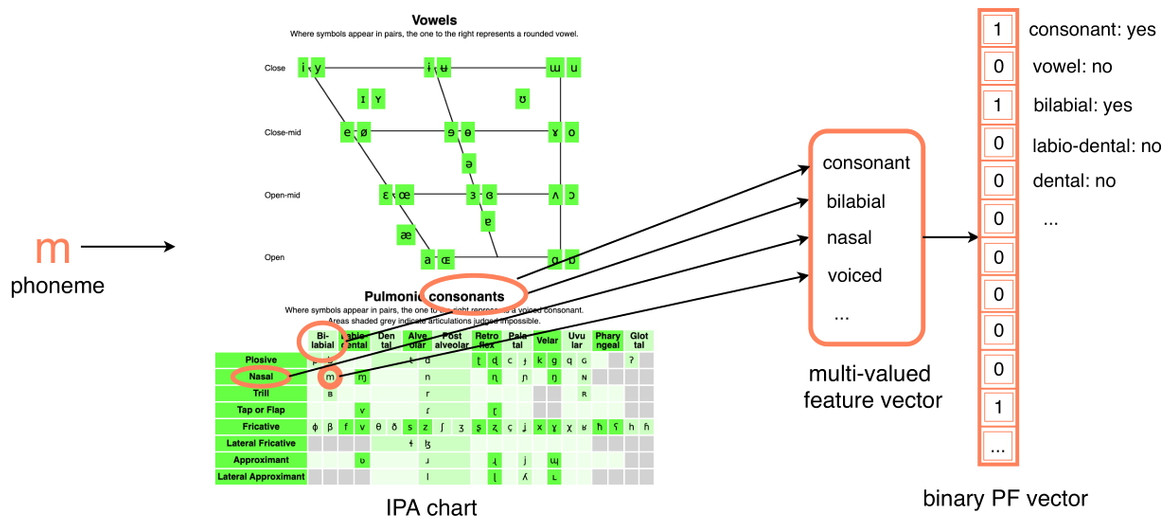

0-shot

In our research [6], we leverage this ability of PFs to generalise to create a “0-shot” TTS system in a new language, that is, without any training data from that language. Rather than phonemes, which are now often used in sequence-to-sequence TTS models (to train multilingual models [2], or improve general performance [7]), we feed a vector of PFs as input to a network trained to predict speech features (mel-filterbank features, MFBs). PFs are read from the IPA chart and encoded into binary features, as shown below:

(For those who want to try this out at home or build on our research, resources for easily reproducing this mapping can be found in our supplementary materials. The sklearn OneHotEncoder is a useful tool for converting the resulting PFs into a binary feature vector.)

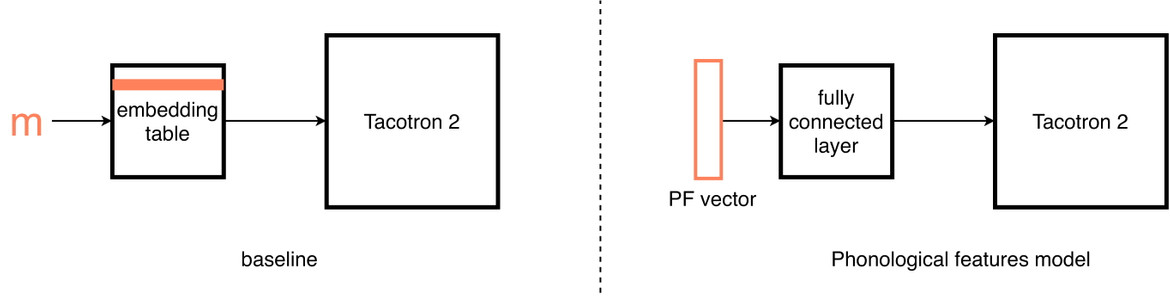
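The encoding step can be sketched in a few lines of plain Python. The attribute inventory below covers only three vowel attributes and is an assumption made for illustration; the real mapping is the one in the supplementary materials. Each attribute becomes a one-hot group, and the groups are concatenated into one binary vector.

```python
# Hypothetical, vowel-only feature inventory: attribute -> possible values.
INVENTORY = {
    "height": ["close", "mid", "open"],
    "backness": ["front", "central", "back"],
    "rounding": ["rounded", "unrounded"],
}

# Feature values as read from the IPA vowel chart.
PHONEMES = {
    "i": {"height": "close", "backness": "front", "rounding": "unrounded"},
    "u": {"height": "close", "backness": "back", "rounding": "rounded"},
    # [y] (as in German "Dürer") does not occur in English training data.
    "y": {"height": "close", "backness": "front", "rounding": "rounded"},
}


def encode(phoneme):
    """Concatenate one one-hot group per attribute into a binary PF vector."""
    vector = []
    for attr, values in INVENTORY.items():
        vector.extend(1 if PHONEMES[phoneme][attr] == v else 0 for v in values)
    return vector


for symbol in PHONEMES:
    print(symbol, encode(symbol))
```

In this toy setup the vector for the unseen [y] reuses the “close” and “front” bits of [i] and the “rounded” bit of [u], so every active input dimension has already been seen in training. With a table of categorical features, the sklearn OneHotEncoder mentioned above can produce the same kind of vector.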

The resulting feature vector is fed through a linear feedforward layer to create a continuous representation space, similar to the embedding space of the typical sequence-to-sequence TTS architecture. The transformed vector is then fed as input to a Tacotron 2 model [1]. As a baseline, we compare this model to a Tacotron 2 that takes phonemes as input:

(One step I have actually left out here is that phonological transcriptions of each word have to be retrieved from a dictionary or rule-based pronunciation module. This is the case for the baseline as well as our proposed method.)
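To illustrate why the linear layer described above behaves like an embedding space, here is a small sketch in plain Python. The dimensions, the random weights and the absence of a bias term are all assumptions for the sake of the example. For a binary input, the projection is simply the sum of the weight rows that belong to the active features.

```python
import random

PF_DIM, EMB_DIM = 8, 4  # toy sizes, chosen for illustration only
rng = random.Random(0)

# Stand-in for trained weights: one row per binary phonological feature.
W = [[rng.uniform(-0.1, 0.1) for _ in range(EMB_DIM)] for _ in range(PF_DIM)]


def project(pf_vector, weights):
    """Bias-free linear layer: returns pf_vector @ weights."""
    n_out = len(weights[0])
    return [
        sum(x * row[j] for x, row in zip(pf_vector, weights))
        for j in range(n_out)
    ]


# Binary PF vector of a hypothetical unseen phoneme (features 0, 3 and 6 active).
unseen = [1, 0, 0, 1, 0, 0, 1, 0]
embedding = project(unseen, W)

# The same result, written as a sum of per-feature rows.
row_sum = [W[0][j] + W[3][j] + W[6][j] for j in range(EMB_DIM)]
print(embedding)
print(row_sum)
```

Because each row is learned from every training phoneme that carries the corresponding feature, a new combination of known features still maps to a sensible point in the space, whereas a phoneme-embedding table has no entry at all for a new symbol.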

Both systems are trained on A) an open-source English dataset (VCTK), and B) a multilingual dataset composed of VCTK and some of our proprietary Spanish data (to reproduce this, the open-source Spanish Sharvard corpus would be an alternative). For B, we include a language embedding as an additional input to the Tacotron 2 encoder (similar to [2]).

These models, trained on A) only English or B) Spanish and English, are then used to synthesise a completely new language. In our experiments, we used German as the test language for practical reasons; in real applications, the same method can be applied to genuinely low-resource languages. In all cases, we selected test sentences specifically to include phonemes never seen in training. While others have trained larger multilingual models on families of related languages, and managed to synthesise test sentences in a new language [3], to our knowledge, we were the first to try synthesising completely unseen sounds, marking the “true 0-shot case”.

Creating intelligible speech sounds in a new language - without training

To create new, unseen phonemes with our model trained on PFs, all we have to do is map the out-of-sample (OOS) phonemes to their corresponding feature vectors, and feed these to the network. We want to see if this approximation of new sounds is better than

- no approximation (that is: does the network learn anything at all about generalising those features to produce new sounds?) and

- a manual expert-mapping approach (that is: do PFs really allow us to produce more than just a mapping to the closest sound seen in training?)

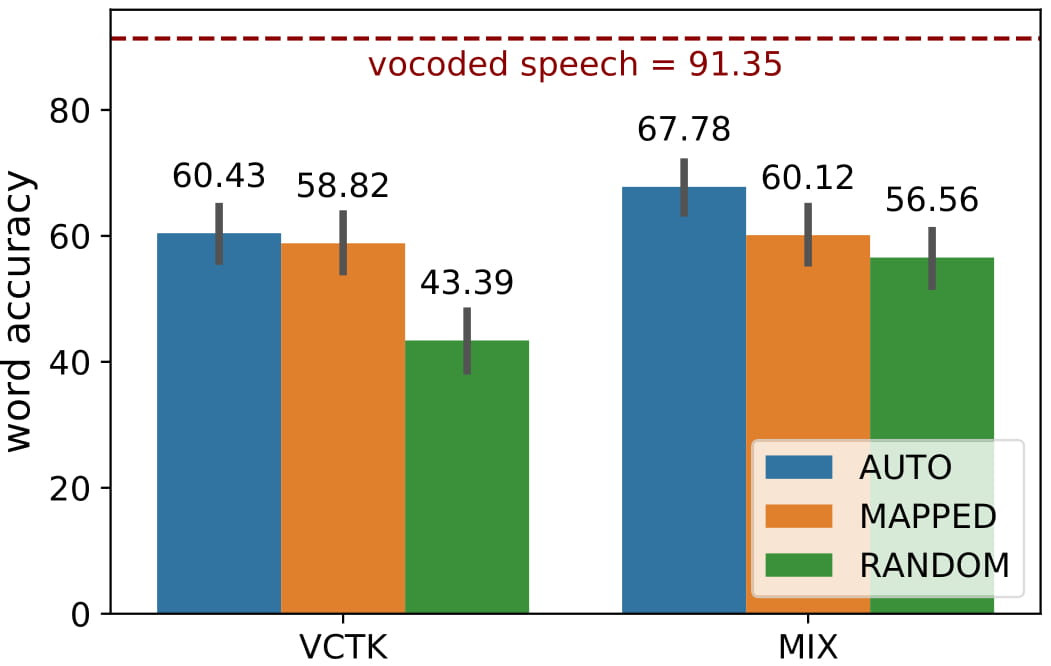

To answer these questions, we compare our method (which we call AUTO) to two baselines:

- RANDOM: choosing a random, untrained embedding to replace the unseen sounds with in the baseline model

- MANUAL: using the “linguistically closest” sound, according to an expert mapping
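The difference between the three ways of handling an unseen phoneme can be sketched as follows. All values are toy placeholders: the embedding table, the expert mapping from [y] to [u] and the function names are hypothetical, and the real systems are full Tacotron 2 models rather than lookup tables.

```python
import random

EMB_DIM = 4  # toy embedding size
rng = random.Random(0)

# Baseline model: one trained embedding per phoneme seen in training.
trained_embeddings = {
    p: [rng.uniform(-1, 1) for _ in range(EMB_DIM)] for p in ["i", "u", "d", "r"]
}

# Hypothetical expert mapping from unseen phonemes to the closest seen one.
EXPERT_MAP = {"y": "u"}

# Binary PF vectors for the PF model (toy vowel features, as an assumption).
PF_VECTORS = {"y": [1, 0, 0, 1, 0, 0, 1, 0]}


def auto_input(phoneme):
    """Our method: feed the unseen phoneme's PF vector to the PF model."""
    return PF_VECTORS[phoneme]


def expert_mapped_embedding(phoneme):
    """Expert-mapping baseline: reuse the embedding of the closest seen phoneme."""
    target = phoneme if phoneme in trained_embeddings else EXPERT_MAP[phoneme]
    return trained_embeddings[target]


def random_embedding(phoneme, rng):
    """Random baseline: unseen phonemes get a fresh, untrained embedding."""
    if phoneme in trained_embeddings:
        return trained_embeddings[phoneme]
    return [rng.uniform(-1, 1) for _ in range(EMB_DIM)]


print(auto_input("y"))
print(expert_mapped_embedding("y") == trained_embeddings["u"])
print(random_embedding("y", random.Random(1)))
```

Only the first option gives the network any information about how the new sound relates to the sounds it already knows; the other two either discard the difference or provide no information at all.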

All 3 methods are used to synthesise German speech from our monolingual and multilingual models. Finally, we ask native speakers to transcribe the resulting German sentences, to assess how well they understand words containing varying numbers of unseen phonemes. The results are shown in this plot:

In both the monolingual (VCTK) and the multilingual (MIX) model, our PF model significantly outperforms the RANDOM baseline (p < 0.001), and matches or outperforms the MANUAL one. Interestingly, AUTO is significantly better (p < 0.05) than MANUAL in the multilingual model, but does not yield significantly different results from MANUAL in the monolingual one. There are a few possible explanations for this finding, but we believe it is mainly because, in the VCTK model, some of the unseen sounds like [R] collapse to something nonsensical, like [G], which looks close in phonological feature space but is not recognisable as the targeted sound. In this case, the manual mapping to [ɹ], which shares fewer PFs with [R] but does share a graphical symbol (and, perhaps historically, a more similar linguistic function), yields better results. Another hypothesis is that the larger overall phoneme inventory in the MIX model forces it to learn a richer representation space, making it better at generalising to new, unseen sounds.

Here is an example of an English sentence containing a German name synthesised with the different methods (sentence: “Albrecht Dürer, sometimes spelled in English as Durer, without umlaut, was a German painter.”; highlighted characters correspond to unseen phonemes):

With phonological features:

With a manual phoneme mapping:

With random embeddings:

More meaningful representation space

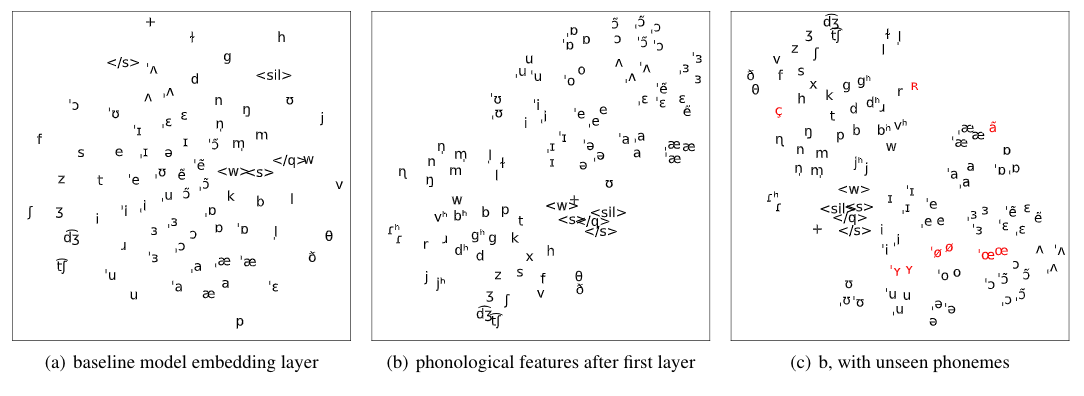

Finally, we also wanted to compare the representation space that our PF model was able to learn to the baseline. As shown in the t-SNE plots below, both representation spaces somewhat organise sounds according to phonetic similarity:

However, while phoneme embeddings in the baseline model are distributed uniformly across the embedding space, meaningful clusters emerge only in the PF model: symbols first group by broader category (vowels, consonants and additional symbols used for marking word boundaries, silences etc.), and secondly by additional features within their respective clusters (e.g., within consonants: manner, then place and finally voicing). Furthermore, unseen phonemes automatically land in a phonetically appropriate area of the embedding space: for instance, [ʏ] is somewhere between [u] and [i], with both of which it shares perceivable phonological features.

All you really need is…

Don’t worry, we’re not going to conclude with another catchy twist on a great Beatles song! And the great thing about this kind of research is that - whatever it is, it’s never really all you need. In terms of bringing 0-shot TTS to the next level, we’re currently testing these results on various other languages. We’re also trying to work out which types and which number of additional training languages are especially beneficial for 0-shot TTS in a specific language. If you have other ideas, comments or contradictory results, please reach out to us!

More details can also be found in our paper, and additional samples on our samples page.

Acknowledgements

Thanks to Tian for being a driving force in the development of this research with me. Thanks to Doniyor for asking lots of questions about speech, language and phonological features, with which he inspired us to do this research. Thanks to Alexandra for the amazing editing work on this post.

References

[1] J. Shen, R. Pang, R. J. Weiss, M. Schuster, N. Jaitly, Z. Yang, Z. Chen, Y. Zhang, Y. Wang, R. Skerry-Ryan et al., “Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions,” 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018.

[2] Y. Zhang, R. J. Weiss, H. Zen, Y. Wu, Z. Chen, R. Skerry-Ryan, Y. Jia, A. Rosenberg, and B. Ramabhadran, “Learning to speak fluently in a foreign language: Multilingual speech synthesis and cross-language voice cloning,” INTERSPEECH, pp. 2080–2084, 2019.

[3] A. Gutkin and R. Sproat, “Areal and phylogenetic features for multilingual speech synthesis,” INTERSPEECH, pp. 2078–2082, 2017.

[4] B. Li, Y. Zhang, T. Sainath, Y. Wu, and W. Chan, “Bytes are all you need: End-to-end multilingual speech recognition and synthesis with bytes,” 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5621–5625, 2019.

[5] S. King and P. Taylor, “Detection of phonological features in continuous speech using neural networks,” Computer Speech & Language, vol. 14, no. 4, pp. 333–353, 2000.

[6] M. Staib, T. Teh, A. Torresquintero, D. Mohan, L. Foglianti, R. Lenain and J. Gao, “Phonological Features for 0-shot Multilingual Speech Synthesis,” INTERSPEECH, 2020.

[7] J. Fong, J. Taylor, K. Richmond, and S. King, “A comparison between letters and phones as input to sequence-to-sequence models for speech synthesis,” 10th ISCA Speech Synthesis Workshop, pp. 223–227, 2019.
